What if you could give your car commands?

For many car enthusiasts, the idea of interacting with a vehicle through voice commands was once the stuff of science fiction. We still remember the 1980s television series Knight Rider, where Michael Knight (played by David Hasselhoff) teamed up with his intelligent, voice-activated Pontiac Trans Am, K.I.T.T., short for Knight Industries Two Thousand. Together, they fought crime and inspired a generation of engineers and dreamers.

Today, thanks to modern voice technology and advanced automotive platforms like the AutoPi CAN-FD Pro, that dream is closer to reality than ever.

With the AutoPi platform integrated with Google Assistant, it’s now possible to issue spoken commands to your vehicle and receive audio feedback or alerts in return. From triggering functions like rolling down the windows or enabling lights, to receiving audible warnings or status updates, voice interaction can enhance both driver experience and vehicle safety.

This isn’t just a novelty; it’s a practical upgrade. Voice commands can reduce driver distraction, automate routine actions, and provide real-time information while keeping hands on the wheel and eyes on the road.

While we’re still a few steps away from holding full conversations with our cars, the foundational technology is here. With AutoPi, you can build a system where your car listens, responds, and takes action, just like K.I.T.T.

Below is a demonstration showing how this can be achieved using the AutoPi IoT platform and Google Assistant as a voice interface:

What is AutoPi CAN-FD Pro?

The AutoPi TMU is a telematics device that plugs into the OBD-II port of your car.

Once connected, the TMU device starts working automatically. It has 4G/LTE connectivity, so it is always connected to the Internet. It also offers many other features, such as continuous GPS tracking.

It comes with an advanced cloud management platform that you can log in to from any device. From there, you can set up your system and track your vehicle's telematics in real time.

How to use Google Assistant and AutoPi together?

Google released the Google Assistant SDK with a Python-based library. This SDK lets you integrate Google Assistant with the AutoPi CAN-FD Pro and set up a system where you can give commands to your car.

When Google introduced the Google Assistant SDK with a Python version, they must have had an AutoPi device in mind, because the two go together perfectly.

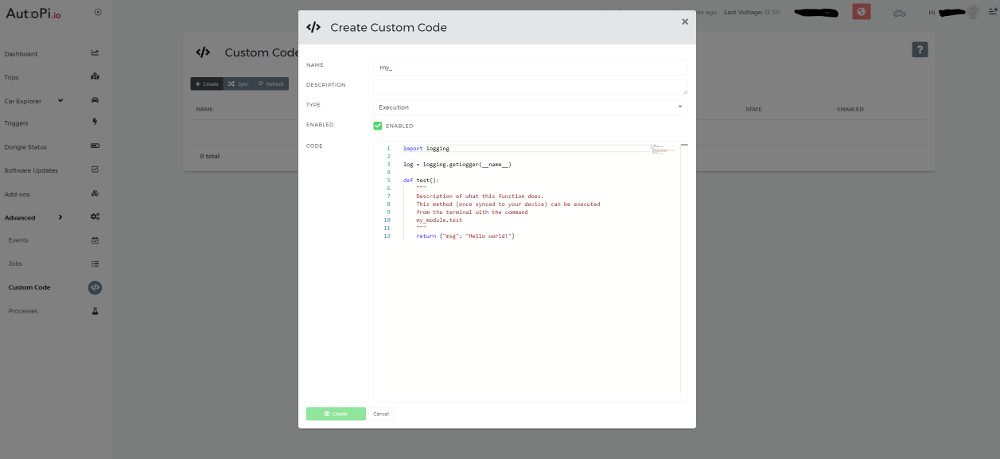

AutoPi Core (the software running on the AutoPi TMU device) is built using Python. With the AutoPi dashboard, it is possible to write custom Python code modules and upload them directly to the AutoPi CAN-FD Pro device.

It is easy to program your AutoPi directly from the web interface and thereby set it up to integrate with Google Assistant.

The AutoPi CAN-FD Pro device comes with a built-in speaker and a connection to your car (or should we call it K.I.T.T.?) using the OBD-II connector. The OBD-II connector lets AutoPi communicate with the embedded computer systems in your car.
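To make the OBD-II link more concrete, here is a minimal sketch of how a standard OBD-II query for vehicle speed is encoded on CAN and how the reply is decoded. It assumes ISO 15765-4 with 11-bit IDs: requests go to the functional ID 0x7DF, and the engine ECU typically answers from 0x7E8. It uses only the Python standard library. The helper names are our own and are not AutoPi's OBD API. Body functions such as windows are not part of standard OBD-II and use manufacturer-specific CAN messages instead.

```python
import struct

# Standard 11-bit functional (broadcast) request ID for OBD-II queries on CAN.
OBD_FUNCTIONAL_REQUEST_ID = 0x7DF
# Service 0x01 = "show current data"; PID 0x0D = vehicle speed.
SERVICE_CURRENT_DATA = 0x01
PID_VEHICLE_SPEED = 0x0D


def build_obd_request(service: int, pid: int) -> bytes:
    """Return the 8-byte data field of an OBD-II single-frame request."""
    # Byte 0 counts the meaningful bytes that follow (service + PID = 2).
    # The padding value varies between tools; 0x55 is one common choice.
    return bytes([0x02, service, pid, 0x55, 0x55, 0x55, 0x55, 0x55])


def decode_speed_response(data: bytes) -> int:
    """Decode a vehicle-speed response (e.g. from ECU 0x7E8) into km/h."""
    if len(data) < 4 or data[0] < 3:
        raise ValueError("response too short")
    # A positive response echoes the service ID plus 0x40 and repeats the PID.
    if data[1] != SERVICE_CURRENT_DATA + 0x40 or data[2] != PID_VEHICLE_SPEED:
        raise ValueError("not a vehicle-speed response")
    # PID 0x0D is a single byte A giving the speed directly in km/h.
    return data[3]


def pack_socketcan_frame(can_id: int, data: bytes) -> bytes:
    """Pack a frame in Linux SocketCAN's struct can_frame layout."""
    # 32-bit ID, 8-bit data length, 3 padding bytes, 8 data bytes.
    return struct.pack("=IB3x8s", can_id, len(data), data)
```

For example, `decode_speed_response(bytes([0x03, 0x41, 0x0D, 0x32, 0x55, 0x55, 0x55, 0x55]))` returns 50 (km/h).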

The only thing you need to add is a microphone connected to AutoPi's USB port.

The main focus is setting up the software on the device, and that's where the Google Assistant SDK helps. With it, you can parse audio recordings from your device and turn them into actions. In short, this is how it works:

- Speak a command using the USB microphone. The voice command is captured by the connected USB microphone for further processing.
- Send the recorded command to Google for parsing. The device forwards the audio input to Google’s speech recognition service for interpretation.
- Google sends back text parsed from the recording. Once the audio is processed, Google returns a text result representing the user's command.
- The device initiates the action based on the result from Google. Using the parsed text, the system determines the appropriate response and executes the corresponding action.
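The last step, turning Google's text result into an action, can be as simple as keyword matching. The sketch below is illustrative only. The handler names and patterns are hypothetical. On a real device, the handlers would call into the OBD and speaker subsystems instead of just returning a reply string.

```python
import re
from typing import Callable, Dict, Optional


# Hypothetical action handlers; real ones would trigger vehicle functions.
def roll_down_windows() -> str:
    return "Rolling down the windows."


def turn_on_lights() -> str:
    return "Turning on the lights."


# Keyword patterns in the recognized text mapped to handlers (checked in order).
COMMANDS: Dict[str, Callable[[], str]] = {
    r"\b(roll|open)\b.*\bwindows?\b": roll_down_windows,
    r"\b(turn|switch)\b.*\blights?\b": turn_on_lights,
}


def dispatch(transcript: str) -> Optional[str]:
    """Run the first handler whose pattern matches; return its reply, or None."""
    text = transcript.lower().strip()
    for pattern, action in COMMANDS.items():
        if re.search(pattern, text):
            return action()
    return None  # no known command in the transcript
```

Regular expressions keep the sketch dependency-free. A production system would more likely rely on the intent handling provided by the voice platform.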

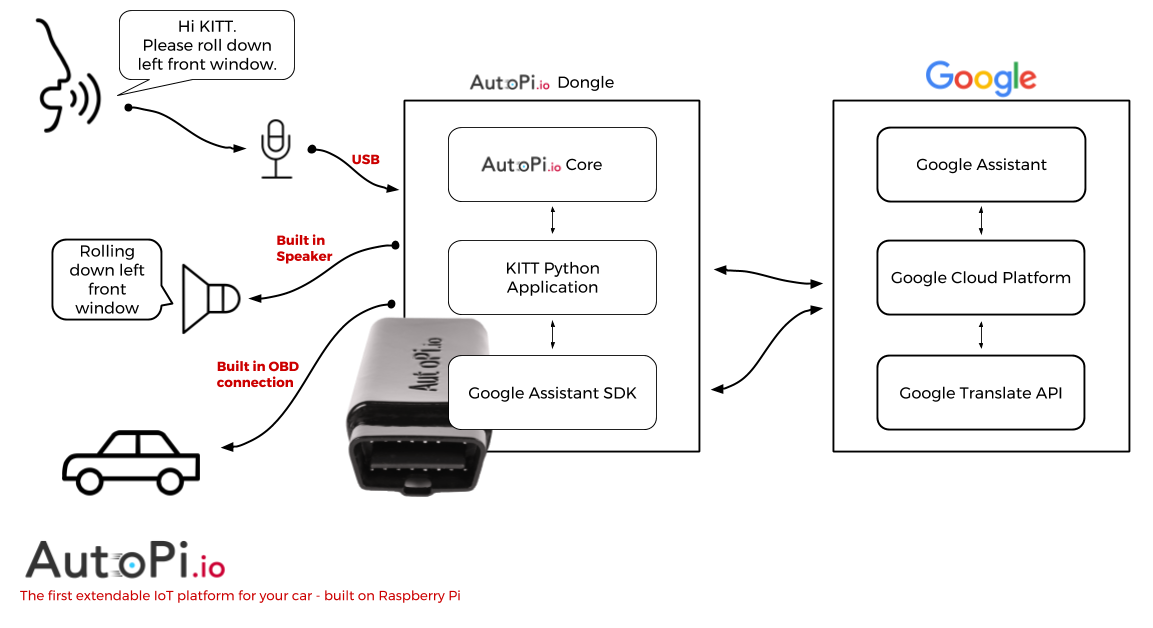

A technical overview of how the communication flows between the user, AutoPi and Google is shown here.

The flow between the systems is:

- User speaks a command. The process begins with the user issuing a voice command, which activates the voice control system.
- The K.I.T.T. Python application records the spoken command and sends it to Google using the Assistant SDK. The voice input is captured and transmitted via the Google Assistant SDK for interpretation.
- Google Cloud Platform translates the recorded speech into a text string. Google’s speech recognition engine processes the audio and returns a text version of the command.
- The text string is returned to the K.I.T.T. Python application through the SDK. The parsed command is sent back to the local application for further handling.
- The K.I.T.T. Python application parses the text string and initiates the action it describes, in this case through the OBD subsystem. The application interprets the command and determines that it should trigger a vehicle function via the OBD system.
- The OBD subsystem routine is triggered and sends a command on the CAN bus through the OBD-II port. A CAN frame is sent through the OBD-II interface to perform a specific action, such as rolling down the window.
- The window in the car is rolled down. The vehicle executes the received CAN command, and the physical action takes place.
- The speaker subsystem routine is triggered. It sends the text to be spoken to Google, which converts it into a sound file. The system formulates a spoken response and sends the text to Google’s Text-to-Speech service for audio generation.
- Google returns the sound file. The generated audio response is received from Google’s cloud service.
- The speaker subsystem plays the sound file to the user. The system outputs the audio through the connected speaker, completing the interaction.
- Great Success! The full command cycle is complete: voice in, action completed, and feedback delivered.
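Put together, the flow above forms a small pipeline. The following sketch models it in Python under explicit assumptions. Speech recognition, the CAN bus and text-to-speech are passed in as plain functions. They stand in for the Google Assistant SDK, AutoPi's OBD subsystem and Google's Text-to-Speech service, none of whose real APIs are reproduced here. The window frame's ID and payload are hypothetical placeholders, because window control messages differ from one vehicle to the next.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# A CAN frame as (arbitration ID, data bytes).
CanFrame = Tuple[int, bytes]

# HYPOTHETICAL placeholder: real window-control IDs/payloads are manufacturer-specific.
WINDOW_DOWN_FRAME: CanFrame = (0x123, bytes([0x01, 0x00]))


@dataclass
class KittPipeline:
    recognize: Callable[[bytes], str]     # audio -> text (stand-in for cloud speech recognition)
    send_can: Callable[[CanFrame], None]  # stand-in for the OBD subsystem writing to the CAN bus
    speak: Callable[[str], None]          # stand-in for text-to-speech plus speaker playback

    def handle(self, audio: bytes) -> str:
        """Run one command cycle: recognize, act on the vehicle, speak a reply."""
        text = self.recognize(audio).lower()
        if "window" in text and "down" in text:
            self.send_can(WINDOW_DOWN_FRAME)
            reply = "Rolling down the window."
        else:
            reply = "Sorry, I did not understand that."
        self.speak(reply)
        return reply
```

Because the subsystems are injected as functions, you can test the logic on a desktop with fakes before wiring in real hardware. For example, `KittPipeline(recognize=lambda audio: "roll the window down", send_can=print, speak=print).handle(b"")` prints the placeholder frame and the spoken reply.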

This is just a short example of how the K.I.T.T. project could come alive. Beyond making your car talk to you, having Google Assistant in your car opens up many other possibilities.

Another integration possibility is to have your car respond to commands spoken to a smartphone when you are away from the car. For example, you could start your car remotely by voice. That would be true Knight Rider style.

AutoPi Featured at Google I/O 2017

AutoPi was showcased at the Google I/O 2017 conference in San Francisco, Google’s annual developer event highlighting cutting-edge technology and real-world applications of its platforms.

During the presentation, AutoPi was featured as a key example of how developers can integrate the Google Assistant SDK into embedded systems to enable voice-controlled interactions with physical devices, in this case, a vehicle. The demonstration highlighted how AutoPi leverages voice recognition, cloud connectivity, and vehicle diagnostics to build smart, interactive automotive solutions.

You can watch the full video below. To see the segment featuring AutoPi, skip ahead to minute 3:35.

Let us know what you would like your car to do and how you think it could be done using AutoPi and Google Assistant.

Recommended by our users

AutoPi is widely used by developers, vehicle integrators, and advanced users who want full control over how they interact with their vehicles. Whether it's voice-activated controls, automated diagnostics, or real-time fleet telemetry, the flexibility of the AutoPi platform allows users to build solutions tailored to their specific needs.

Some of the most common use cases include creating custom voice assistant integrations, monitoring vehicle health remotely, and triggering CAN bus actions based on spoken commands. These projects are made possible thanks to the open architecture, cloud dashboard, and full access to CAN and OBD2 interfaces.

Have questions about what's possible or how to get started? Contact us; our team is ready to help you plan, build, and deploy your custom automotive project.